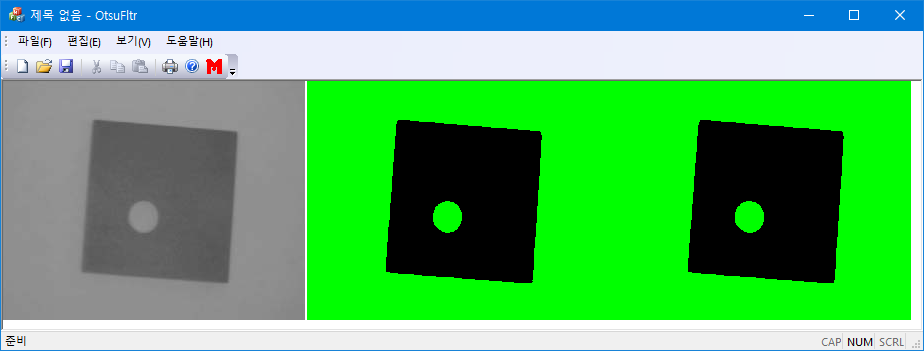

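Splitting an image into regions of uniform color (or intensity) is very useful for tasks such as compression and segmentation. A quadtree does this by recursively dividing a block into four child blocks until every block is homogeneous. As a refinement, any of the four child nodes that differs little from its parent node can be merged back into the parent (a partial merge).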
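The partial merge mentioned above is not part of the listing in this post. A minimal sketch of one way it could work is shown here, assuming a simplified node type: `Node`, `mergeSimilarChildren`, and the tolerance `tol` are hypothetical names introduced for illustration, and the rule "drop a leaf child whose mean is within `tol` of the parent's mean" is an assumption rather than the author's method.

```cpp
#include <cassert>
#include <cmath>
#include <memory>

// Hypothetical stand-in for QuadNode: only the fields the merge pass needs.
struct Node {
    double mean = 0.0;
    std::unique_ptr<Node> child[4];  // empty slot = quadrant represented by this node's mean

    bool isLeaf() const {
        for (const auto& c : child)
            if (c) return false;
        return true;
    }
};

// Bottom-up pass: remove every leaf child whose mean is within `tol` of the
// parent's mean. Returns the number of children merged away.
int mergeSimilarChildren(Node& node, double tol) {
    assert(tol >= 0.0);
    int merged = 0;
    for (auto& c : node.child) {
        if (!c) continue;
        merged += mergeSimilarChildren(*c, tol);  // handle the subtree first
        if (c->isLeaf() && std::fabs(c->mean - node.mean) < tol) {
            c.reset();                            // quadrant now uses the parent's mean
            ++merged;
        }
    }
    return merged;
}
```

After this pass a node may keep only some of its four children; a renderer would then fill the missing quadrants with the parent's mean.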

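```cpp
#include "QuadTree.h"
#include <queue>
#include <math.h>

// Empty leaf node; mean = -1 marks "statistics not computed yet".
QuadNode::QuadNode() {
    for (int i = 0; i < 4; i++) children[i] = NULL;
    rect = CRect(0, 0, 0, 0);
    hasChildren = false;
    mean = -1;
    stdev = 0;
}

// Leaf node covering rc. A const reference is needed so that Subdivide() can
// pass temporary CRect objects in standard C++; the declaration in QuadTree.h
// must match.
QuadNode::QuadNode(const CRect& rc) {
    for (int i = 0; i < 4; i++) children[i] = NULL;
    rect = rc;
    hasChildren = false;
    mean = -1;
    stdev = 0;
}
```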

```cpp
// Split the node into four quadrants around the center point:
// 0 = top-left, 1 = bottom-left, 2 = bottom-right, 3 = top-right.
void QuadNode::Subdivide() {
    const CPoint c = rect.CenterPoint();
    hasChildren = true;
    children[0] = new QuadNode(CRect(rect.TopLeft(), c));
    children[1] = new QuadNode(CRect(rect.left, c.y, c.x, rect.bottom));
    children[2] = new QuadNode(CRect(c, rect.BottomRight()));
    children[3] = new QuadNode(CRect(c.x, rect.top, rect.right, c.y));
}
```
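To check the geometry of the split without MFC, here is a small standalone sketch. `Rect` and `splitRect` are hypothetical stand-ins for `CRect` and `Subdivide()`; the rectangle is assumed to be half-open (right and bottom edges excluded), as with `CRect`, and the center is computed with integer division.

```cpp
#include <array>
#include <cassert>

// Hypothetical half-open rectangle: covers [left, right) x [top, bottom).
struct Rect {
    int left, top, right, bottom;
    int width() const { return right - left; }
    int height() const { return bottom - top; }
    int area() const { return width() * height(); }
};

// Same quadrant order as the listing:
// 0 = top-left, 1 = bottom-left, 2 = bottom-right, 3 = top-right.
std::array<Rect, 4> splitRect(const Rect& r) {
    assert(r.width() >= 2 && r.height() >= 2);  // otherwise a child would be empty
    const int cx = (r.left + r.right) / 2;
    const int cy = (r.top + r.bottom) / 2;
    return {{
        {r.left, r.top, cx, cy},
        {r.left, cy, cx, r.bottom},
        {cx, cy, r.right, r.bottom},
        {cx, r.top, r.right, cy},
    }};
}
```

Because the four children share the same center lines, they tile the parent exactly even when its width or height is odd; the right and bottom children simply receive the extra pixel.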

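```cpp
// Free the memory allocated below this node; the root node itself is deleted separately.
void QuadNode::Clear() {
    if (!hasChildren) return;
    for (int i = 0; i < 4; i++) {
        if (children[i]) {
            children[i]->Clear();
            delete children[i];
            children[i] = NULL;  // avoid dangling pointers and double deletes
        }
    }
    hasChildren = false;
}
```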

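```cpp
QuadTree::QuadTree(CRect rc, CRaster& image) {
    rect = rc & image.GetRect();  // clip rect to the image bounds
    raster = image;
    root = NULL;
}

// Build the tree breadth-first: a block is split while it is not homogeneous
// and is still larger than 2 pixels in both dimensions.
void QuadTree::Build() {
    root = new QuadNode();
    root->rect = this->rect;
    std::queue<QuadNode *> TreeQueue;
    TreeQueue.push(root);
    while (!TreeQueue.empty()) {
        QuadNode* node = TreeQueue.front();
        TreeQueue.pop();
        CRect rc = node->rect;
        if (rc.IsRectEmpty()) continue;
        // Call IsHomogenous() before the size test so that mean/stdev are set on
        // every node; otherwise blocks too small to split keep mean = -1.
        bool homogeneous = IsHomogenous(node);
        if (!homogeneous && rc.Width() > 2 && rc.Height() > 2) {
            node->Subdivide();
            for (int i = 0; i < 4; i++)
                TreeQueue.push(node->children[i]);
        }
    }
}
```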
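The breadth-first construction can also be tried outside MFC. The following is a minimal sketch under stated assumptions: the image is a row-major 8-bit buffer in a `std::vector`, and `Block` and `decompose` are hypothetical names that return the leaf blocks directly instead of building a pointer-based tree. The statistics are computed for every block, including those too small to split.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <vector>

// Hypothetical leaf record: position, size, and mean gray level of a block.
struct Block { int x, y, w, h; double mean; };

// Breadth-first quadtree decomposition of a row-major 8-bit image.
// A block is split when its standard deviation is >= thresh and it is
// larger than 2 pixels in both dimensions.
std::vector<Block> decompose(const std::vector<std::uint8_t>& img,
                             int width, int height, double thresh) {
    assert(width > 0 && height > 0);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    std::vector<Block> leaves;
    std::queue<Block> pending;
    pending.push({0, 0, width, height, 0.0});
    while (!pending.empty()) {
        Block b = pending.front();
        pending.pop();
        double s = 0.0, ss = 0.0;
        for (int y = b.y; y < b.y + b.h; ++y)
            for (int x = b.x; x < b.x + b.w; ++x) {
                const double a = img[static_cast<std::size_t>(y) * width + x];
                s += a;
                ss += a * a;
            }
        const double n = static_cast<double>(b.w) * b.h;
        b.mean = s / n;
        const double var = (ss - s * s / n) / n;
        const double stdev = std::sqrt(var > 0.0 ? var : 0.0);
        if (stdev >= thresh && b.w > 2 && b.h > 2) {
            const int hw = b.w / 2, hh = b.h / 2;
            pending.push({b.x, b.y, hw, hh, 0.0});                        // top-left
            pending.push({b.x, b.y + hh, hw, b.h - hh, 0.0});             // bottom-left
            pending.push({b.x + hw, b.y + hh, b.w - hw, b.h - hh, 0.0});  // bottom-right
            pending.push({b.x + hw, b.y, b.w - hw, hh, 0.0});             // top-right
        } else {
            leaves.push_back(b);
        }
    }
    return leaves;
}
```

For a 4x4 image whose left half is black and right half is white, the root fails the test and is split once, which yields four uniform 2x2 leaves.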

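```cpp
// Subdivision criterion: the standard deviation, the max-min difference,
// or a similar measure can be used.
#define THRESH 15

// Compute the block's mean and standard deviation (stored in the node) and
// report whether the block is uniform enough to remain a leaf.
bool QuadTree::IsHomogenous(QuadNode* node) {
    CRect rc = node->rect;
    int n = rc.Width() * rc.Height();
    if (n <= 0) return true;  // empty block: nothing to split
    double s = 0, ss = 0;
    int minval = 255, maxval = 0;
    for (int y = rc.top; y < rc.bottom; y++) {
        BYTE *p = (BYTE *)raster.GetLinePtr(y) + rc.left;
        for (int x = rc.left; x < rc.right; x++) {
            int a = *p++;
            s += a; ss += a * a;
            maxval = max(maxval, a); minval = min(minval, a);
        }
    }
    node->mean = s / n;
    double var = (ss - s * s / n) / n;      // E[a^2] - (E[a])^2
    node->stdev = sqrt(var > 0 ? var : 0);  // clamp small negative rounding errors
    return node->stdev < THRESH;
    // alternative criterion: return (maxval - minval) <= THRESH;
}
```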
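The two criteria named in the comment (standard deviation and max-min difference) can disagree, which is easy to see on a plain buffer. The sketch below assumes a row-major 8-bit image in a `std::vector`; `BlockStats`, `blockStats`, `homogeneousByStdev`, and `homogeneousByRange` are hypothetical helper names, not part of the original class.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct BlockStats {
    double mean;
    double stdev;
    int minval;
    int maxval;
};

// Statistics of the w x h block at (x0, y0) in a row-major 8-bit image
// whose rows are `stride` pixels long.
BlockStats blockStats(const std::vector<std::uint8_t>& img, int stride,
                      int x0, int y0, int w, int h) {
    assert(w > 0 && h > 0);
    assert(x0 >= 0 && y0 >= 0 && x0 + w <= stride);
    assert(static_cast<std::size_t>(y0 + h) * stride <= img.size());
    double s = 0.0, ss = 0.0;
    int lo = 255, hi = 0;
    for (int y = y0; y < y0 + h; ++y) {
        const std::uint8_t* p = img.data() + static_cast<std::size_t>(y) * stride + x0;
        for (int x = 0; x < w; ++x) {
            const int a = p[x];
            s += a;
            ss += a * a;
            lo = std::min(lo, a);
            hi = std::max(hi, a);
        }
    }
    const double n = static_cast<double>(w) * h;
    const double var = std::max(0.0, (ss - s * s / n) / n);  // clamp rounding error
    return {s / n, std::sqrt(var), lo, hi};
}

// Same sense as the listing: homogeneous when the measure stays below the threshold.
bool homogeneousByStdev(const BlockStats& b, double thresh) { return b.stdev < thresh; }
bool homogeneousByRange(const BlockStats& b, int thresh) { return b.maxval - b.minval <= thresh; }
```

A single outlier pixel in an otherwise flat 8x8 block barely moves the standard deviation, so the stdev test keeps the block as a leaf, while the range test would force a split. The range criterion is therefore the stricter choice when isolated details must be preserved.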

```cpp
// Collect the leaf nodes into LeafList.
void QuadTree::FindLeaf(QuadNode* node) {
    if (node == NULL) return;
    if (!node->hasChildren) {
        LeafList.push_back(node);
        return;
    }
    for (int i = 0; i < 4; i++)
        FindLeaf(node->children[i]);
}
```

Usage example:

```cpp
void test_main(CRaster& raster, CRaster& out) {
    ASSERT(raster.GetBPP() == 8);  // grayscale input
    QuadTree qtree(raster.GetRect(), raster);
    qtree.Build();
    qtree.FindLeaf(qtree.root);
    out.SetDimensions(raster.GetSize(), 24);  // RGB color output
    // segment the image
    for (size_t i = 0; i < qtree.LeafList.size(); i++) {
        QuadNode *node = qtree.LeafList[i];
        int a = int(node->mean + .5);
        FillRegion(out, node->rect, RGB(a, a, a));  // fill with the gray average
        // Outline the block. The original comment says red; the Windows RGB macro
        // takes (r, g, b), so the actual color depends on how DrawRectangle
        // interprets the value.
        DrawRectangle(out, node->rect, RGB(0, 0, 0xFF));
    }
    // release the tree; the root is deleted separately from its children
    qtree.root->Clear();
    delete qtree.root;
    qtree.root = NULL;
}
```
